Procedural content generation has fascinated me for a while, but until recently, I’d never had a project where it was an appropriate solution. After disappearing down the rabbit hole of making a content-rich indie FPS, I decided that my next project should have minimal content needs. This led me to start a new game, Second Order, which would follow some guidelines for maintaining scope.

- Infinitely large, pseudorandom, procedural world
  - Time invested in developing the world provides infinite possibilities instead of only one static world
  - Removes any potential for scripting, which saves development time and reinforces player-driven design goals
- Data-driven game configuration
  - Procedural generation algorithms are defined in text files (which end users are free to modify)
  - Entity definitions use a component-based or composition model and are also defined in text files
- ASCII graphics
  - Very constrained visual tools
  - Less potential to feature creep toward shinier graphics
- No audio
  - This constraint makes me sad, because audio is often the secret sauce that makes a good game great
  - But audio is not essential to the goals of Second Order, and it would likely create a dissonance with the low fidelity of the visuals

I was not too familiar with procedural generation considerations, and ended up solving a few problems which are probably quite common to this sort of game.

Because the world in Second Order is infinite, I cannot generate it all at once. Like other games with procedural terrain, I generate the world in chunks as the player approaches unexplored regions. This presents the first challenge: pieces of the world are pseudorandomly generated in an arbitrary order, yet must be consistent regardless of the order in which they are generated.

The solution to this problem is to not actually use any random calls during world generation. Instead, my noise functions are seeded at the start of the game and remain spatially consistent for its duration. (I won’t go into detail about noise functions here, as I feel those are well and thoroughly documented in other places; but if you are familiar with Perlin noise, I am simply referring to the initialization step of seeding the precomputed arrays with random values.)
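A minimal sketch of the idea in Python. It assumes a hash-based lookup in place of the seeded Perlin tables the game actually uses, and the names `hash01` and `generate_chunk` are hypothetical. The point is only that each tile's value is a pure function of the seed and its world coordinate, so chunks can be generated in any order:

```python
import hashlib
import struct

def hash01(seed: int, x: int, y: int) -> float:
    """Map (seed, x, y) to a repeatable float in [0, 1). No random calls involved."""
    digest = hashlib.blake2b(struct.pack("<qqq", seed, x, y), digest_size=8).digest()
    # Keep the top 53 bits so the result is exactly representable and below 1.0.
    return (int.from_bytes(digest, "little") >> 11) / 2**53

def generate_chunk(seed: int, cx: int, cy: int, size: int = 4):
    """Generate one chunk; every tile depends only on the seed and its world coordinate."""
    return [
        [hash01(seed, cx * size + i, cy * size + j) for i in range(size)]
        for j in range(size)
    ]

# Generating chunks in a different order yields identical data.
first = [generate_chunk(7, 0, 0), generate_chunk(7, 1, 0)]
second = [generate_chunk(7, 1, 0), generate_chunk(7, 0, 0)]
print(first[0] == second[1] and first[1] == second[0])
```

A real noise function would interpolate between lattice values to get smooth output; the hash here only stands in for the "seed once, then look up by position" step.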

The second challenge is that the terrain must be continuous across chunk boundaries, which effectively ruled out any kind of neighbor-aware multi-pass algorithm. For example, I might have wanted to do a two-pass generation where I first generate a smooth terrain, then erode it by simulating rainfall and soil displacement. But that kind of erosion algorithm is global: each tile’s second pass value depends on its neighbors’ first pass values. At chunk boundaries, there may not be neighboring data (because the adjacent chunk is outside the player’s proximity sensor and has not been generated yet). A multi-pass algorithm would therefore need to extrapolate from available data to evaluate boundary tiles, which would produce discontinuities along the boundary when the farther chunk is eventually generated.

My solution to this is simply to forbid multi-pass algorithms and develop techniques which produce desirable results in a single, (mostly) neighbor-independent pass. I find it useful in places to evaluate a subtree on a neighboring coordinate, as I will describe in more detail below. This works fine (because spatial consistency is guaranteed) as long as that subtree does not in turn depend on the value of the local coordinate, since that mutual dependency would cause infinite recursion.

Finally, as described in the guidelines above, I want the procedural generation algorithm to be not only data-driven but actually completely defined as content. The ambitious and unstated goal is to eventually provide Second Order as a platform for making new action games, so I don’t want there to be any assumptions in the code about the nature of the terrain.

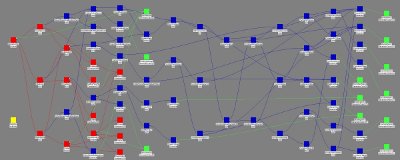

To that end, the code’s view of the algorithm is very generic. It essentially runs a functional programming “shader” on each tile in each chunk.
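As a rough illustration of what "running a shader on each tile" might look like (this is not Second Order's code or file format), a node can be modeled as a plain function from a world coordinate to a value. The code then only needs to know how to call the root node once per tile. The names `Node`, `constant`, `checker`, and `run_shader` are all hypothetical:

```python
from typing import Callable, List

# Hypothetical model: a node maps a world coordinate to some value.
Node = Callable[[int, int], object]

def constant(value: object) -> Node:
    """Leaf node that ignores the coordinate and always emits the same value."""
    return lambda x, y: value

def checker(a: Node, b: Node) -> Node:
    """Toy combinator: alternate between two children by coordinate parity."""
    return lambda x, y: a(x, y) if (x + y) % 2 == 0 else b(x, y)

def run_shader(root: Node, cx: int, cy: int, size: int) -> List[List[object]]:
    """Evaluate the root node once for every tile in chunk (cx, cy)."""
    return [
        [root(cx * size + i, cy * size + j) for i in range(size)]
        for j in range(size)
    ]

root = checker(constant("."), constant("#"))
for row in run_shader(root, 0, 0, 4):
    print("".join(row))
```

Because the engine side only ever calls `root(x, y)`, everything about the terrain lives in how the tree is assembled, which is the part that would come from content.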

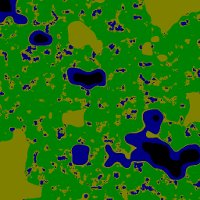

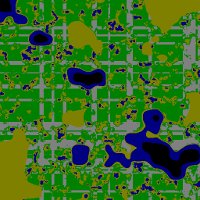

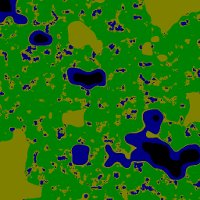

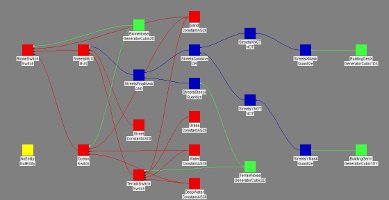

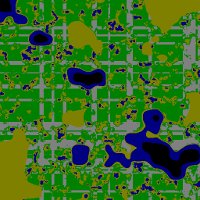

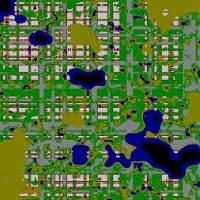

To illustrate this process, here are some examples of both organic and constructed terrain being generated in the engine.

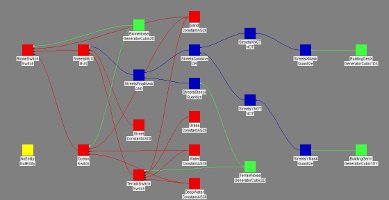

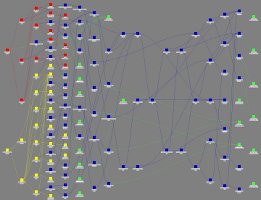

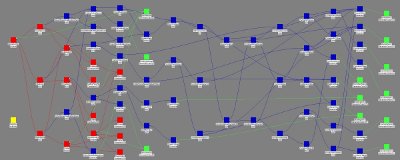

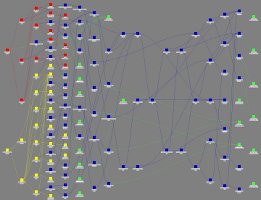

First, a simple terrain of sand, grass, and water is produced by thresholding two noise functions. At the bottom of the tree (on the right side of this graph) are the generator nodes. In shader terms, think of these as textures: they are the source of all subsequent values in the program. The green leaf nodes are generators which emit a floating point number, and the red leaf nodes emit a terrain struct containing visual and collision info. The switch nodes take a floating point number as input, compare this value against some configurable thresholds, and pass through the value of the appropriate child node.

BiomeNoise is a low frequency noise generator which defines the macro landscape features: deserts, large bodies of water, and the grasslands in between. The output of this node is a floating point number which is used by the BiomeSwitch and Ocean nodes to select which of their children to evaluate.

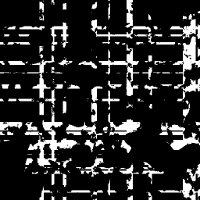

TerrainNoise is a high frequency noise generator which defines micro details within the grasslands: smaller bodies of water and sandy regions surrounding them. Note that the black spots in this image are not an artifact of the noise function; these are simply empty holes where the TerrainNoise function did not need to be evaluated (because the biome noise was above the desert threshold or below the ocean threshold, and BiomeSwitch selected its Ocean or Sand children instead of TerrainSwitch).

The switch nodes are used to combine the terrain structs according to the values of the noise functions, and the result is an infinite, non-repeating terrain.
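The switch behavior described above can be sketched as follows. This is a simplified approximation: the threshold values, the glyphs, and the stand-in noise functions are invented for the example, and the Ocean node is reduced to a constant. The call counter is there only to show that TerrainNoise is never evaluated when the biome switch picks a different child:

```python
from bisect import bisect_right

def constant(value):
    """Leaf node emitting a fixed terrain value."""
    return lambda x, y: value

def switch(selector, thresholds, children):
    """Evaluate `selector`, then evaluate only the child whose band contains it.

    `thresholds` must be ascending, with len(children) == len(thresholds) + 1.
    """
    def node(x, y):
        return children[bisect_right(thresholds, selector(x, y))](x, y)
    return node

calls = {"terrain": 0}

def biome_noise(x, y):
    """Stand-in for the low frequency generator (not real noise)."""
    return (x % 10) / 10.0

def terrain_noise(x, y):
    """Stand-in for the high frequency generator; counts its evaluations."""
    calls["terrain"] += 1
    return (y % 10) / 10.0

WATER, SAND, GRASS = "~", ".", '"'
terrain_switch = switch(terrain_noise, [0.25, 0.45], [constant(WATER), constant(SAND), constant(GRASS)])
biome_switch = switch(biome_noise, [0.25, 0.75], [constant(WATER), terrain_switch, constant(SAND)])

print(biome_switch(0, 0), calls["terrain"])  # ocean band: terrain noise untouched
print(biome_switch(5, 9), calls["terrain"])  # grassland band: terrain noise evaluated
```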

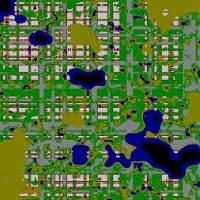

This is actually the terrain that Second Order is currently using, albeit without the streets and buildings layered on top of it. The method I use for generating man-made terrain produces rather unrealistic (regular and axis-aligned) features. As such, I don’t recommend this as a way to build interesting cities; instead, it is intended to show how complex procedural geometry can be built in a single-pass functional program.

This graph is similar to the simple terrain one, but now the midrange biome is a MUX (multiplexer, or Boolean switch) between streets and the grassland we saw above. The blue nodes are new, and indicate that the node has a Boolean output.

(Sharp-eyed readers may note that I’m not being as efficient with my Boolean operations as I could be. !(A&B) would take one fewer operation than (!A)|(!B). I also wouldn’t need to use the NOT operators at all, except that I will later use those same generators for buildings, with streets filling in the negative space between the buildings. In any case, these generation shaders are relatively cheap, and I sometimes find it useful to have these masks of intermediate steps to reuse for future operations.)

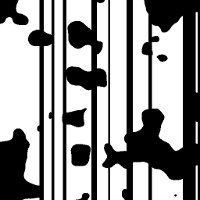

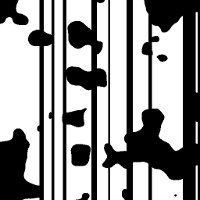

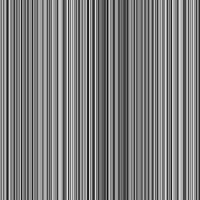

I begin by generating noise in one dimension. This generator is named BuildingGenX, and will later be used to generate buildings. As such, the streets will be formed from the inverse of this data, so as to fill in the space between buildings. (Note that, as before, the black holes in this and subsequent images are regions in which this function did not need to be evaluated.)

The floating point values are quantized at a given threshold to produce a Boolean mask. This represents the space which buildings might inhabit in this dimension…

…and that mask is inverted to produce the space in which streets will exist.

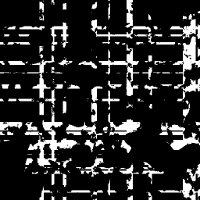

I repeat these same steps with another generator in the Y dimension and combine the results of each mask with an OR operator.

Separately, I produce a mask from the terrain noise generator. This represents areas which are sufficiently far from the sandy and watery regions in the grasslands biome.

These two masks are combined with an AND operator to produce the final mask for streets.

The MUX selects the street terrain info in all the spaces where the street mask is high, and fills in the rest with the grasslands terrain.
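Put together, the street steps might look something like this sketch. The generators here are simple periodic stand-ins rather than noise, the cutoffs are arbitrary, and the helper names (`threshold`, `NOT`, `OR`, `AND`, `mux`) are hypothetical; only the order of operations follows the description above:

```python
def threshold(gen, cutoff):
    """Quantize a floating point node into a Boolean mask node."""
    return lambda x, y: gen(x, y) > cutoff

def NOT(a):
    return lambda x, y: not a(x, y)

def OR(a, b):
    return lambda x, y: a(x, y) or b(x, y)

def AND(a, b):
    return lambda x, y: a(x, y) and b(x, y)

def mux(mask, if_true, if_false):
    """Boolean switch: pick one of two children based on a mask."""
    return lambda x, y: if_true(x, y) if mask(x, y) else if_false(x, y)

# Stand-in generators (assumptions): each one varies along a single axis only.
building_gen_x = lambda x, y: (x % 4) / 4.0
building_gen_y = lambda x, y: (y % 4) / 4.0
# Stand-in for the terrain noise: "dry" for x < 8, near water beyond that.
terrain_noise = lambda x, y: 0.9 if x < 8 else 0.1

street_x = NOT(threshold(building_gen_x, 0.2))   # inverse of the building space in X
street_y = NOT(threshold(building_gen_y, 0.2))   # inverse of the building space in Y
street_grid = OR(street_x, street_y)
dry_land = threshold(terrain_noise, 0.5)         # far enough from sand and water
streets = AND(street_grid, dry_land)

tile = mux(streets, lambda x, y: "=", lambda x, y: '"')
for y in range(6):
    print("".join(tile(x, y) for x in range(12)))
```

Keeping `street_x`, `street_y`, and the un-inverted building masks as separate named nodes mirrors the point made earlier about reusing intermediate masks.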

Finally, we want to create buildings in between the streets. Buildings present some new challenges, because I want interesting floorplans (not just rectangles in between the streets) and I want the walls to always be one tile thick.

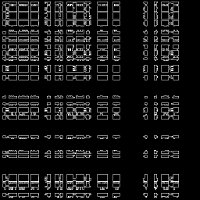

The graph has grown significantly here, but the patterns are the same as what we have seen before. Floating point numbers (green) are generated on the right, quantized into Boolean values (blue) and combined through the middle of the graph, and used to select the appropriate terrain info (red) at the left.

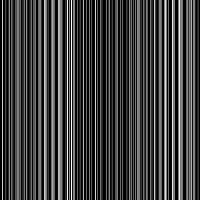

One new node I introduce here is a white noise generator in one dimension.

When quantized, this tends to produce thin lines which are ideal for corridors.
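To see why this happens, here is a small sketch that assumes a hash-based white noise source and an arbitrary cutoff of 0.9 (both are illustrative choices, not the game's). Each column gets an independent value, so only about one column in ten passes the cutoff, and passing columns are rarely adjacent:

```python
import hashlib
import struct

def hash01(seed: int, n: int) -> float:
    """Repeatable white noise: an independent value in [0, 1) per integer input."""
    digest = hashlib.blake2b(struct.pack("<qq", seed, n), digest_size=8).digest()
    return (int.from_bytes(digest, "little") >> 11) / 2**53

def corridor_mask(seed: int, cutoff: float = 0.9):
    """Boolean node that depends on x only, so each hit is a full-height line."""
    return lambda x, y: hash01(seed, x) > cutoff

corridors = corridor_mask(seed=42)
row = "".join("#" if corridors(x, 0) else "." for x in range(40))
print(row)
```

A second mask built the same way on `y` would give horizontal corridors.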

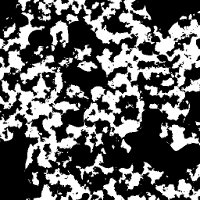

By generating and combining many corridor and room masks (in much the same way as the streets were created before), I produce this mask which represents the complete potential floorplan for all the buildings in the world.

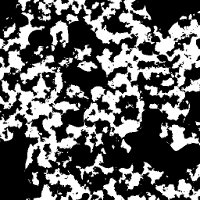

In order to generate walls of a consistent thickness, I use an outline operator. This is the neighbor-aware step I alluded to earlier. It evaluates the floorplan mask at its local coordinate and at each adjacent coordinate, and emits true if its local value is false and it is adjacent to any tile whose value is true.
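A sketch of such an outline operator, under the assumption that "adjacent" includes diagonals (the text does not say, so the example makes it a parameter). The floorplan here is a hard-coded rectangle standing in for the real mask:

```python
def outline(mask, diagonals=True):
    """True where `mask` is false locally but true at some adjacent coordinate."""
    offsets = [
        (dx, dy)
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0) and (diagonals or dx == 0 or dy == 0)
    ]

    def node(x, y):
        if mask(x, y):
            return False
        # Re-evaluate the same subtree at neighboring world coordinates.
        return any(mask(x + dx, y + dy) for dx, dy in offsets)

    return node

# Stand-in floorplan: a 2x2 room at the origin.
floor = lambda x, y: 0 <= x < 2 and 0 <= y < 2
walls = outline(floor)

for y in range(-2, 4):
    print("".join("#" if walls(x, y) else ("." if floor(x, y) else " ") for x in range(-2, 4)))
```

Since `mask` is a pure function of world coordinates, the neighbor lookups give the same answer whether or not the neighboring chunk has been generated, so walls line up across chunk boundaries. The subtree passed in must not itself depend on `walls`, or evaluation would never terminate.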

I then OR the floors and walls masks together and AND the result with a high frequency noise mask to produce this, the actual space in which buildings will be created. (The high-frequency noise is used here to create the appearance of damage or wear to the buildings, suitable for the weathered world of Second Order.)

Before drawing the walls and floors, I use another combination of the corridor and room masks to punch out doorways at the ends of some hallways.

A series of MUXes is used to select between the various parts of the midrange biome: terrain, streets, and buildings with their walls and floors. This is the actual terrain which I am using in the current version of Second Order.

The last part of my procedural generation is to place entities. I won’t explore that topic in depth, as the fundamental ideas are the same: generate some noise (in this case, two-dimensional white noise), filter it, and switch based on many of the same masks that are used to generate the terrain (so we don’t end up with cacti in the ocean, or enemies spawned in the walls of a building).
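For a sense of how that might fit together, here is a hedged sketch. The spawn table, the density value, the terrain stand-in, and the function names are all made up for illustration. Two-dimensional white noise decides whether a tile spawns anything, and the terrain at that tile decides what (if anything) is allowed there:

```python
import hashlib
import struct

def hash01(seed: int, x: int, y: int) -> float:
    """Repeatable 2D white noise in [0, 1)."""
    digest = hashlib.blake2b(struct.pack("<qqq", seed, x, y), digest_size=8).digest()
    return (int.from_bytes(digest, "little") >> 11) / 2**53

def place_entity(seed, x, y, terrain_at, spawn_table, density=0.02):
    """Return an entity name for this tile, or None if nothing spawns here."""
    if hash01(seed, x, y) >= density:        # filter: most tiles stay empty
        return None
    return spawn_table.get(terrain_at(x, y))  # switch on terrain; no entry means no spawn

# Stand-ins (assumptions): water for x < 0, sand elsewhere; cacti only on sand.
terrain_at = lambda x, y: "water" if x < 0 else "sand"
spawn_table = {"sand": "cactus"}

spawned = [(x, y) for x in range(-20, 20) for y in range(20)
           if place_entity(1, x, y, terrain_at, spawn_table) is not None]
print(all(x >= 0 for x, _ in spawned))
```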

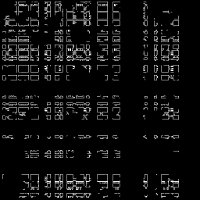

The complete graph for terrain and entity generation looks like this:

You can download Second Order now and edit the configuration files to experiment with its procedural generation. While the game is running, you can export the procedural generation graph with the G key, or export any nodes tagged with “ExportStage = true” by pressing Shift + G.