I have built most of my career on and around immersive games, in particular that murkily defined subset of first-person action games known as immersive simulations. That genre, defined by the titles of Looking Glass Studios, Ion Storm Austin, and Irrational Games, has experienced a minor resurgence during this console generation. But the term “immersion” has also been co-opted as a buzzword for big budget games, where it may be used to mean anything from “atmospheric” to “realistic” to “gripping.”

Recently, Robert Yang wrote an article in which he suggested that “immersion” is now merely code for “not Farmville” and that we as developers should abandon the term.

Although I agree with Yang regarding the unfortunate overloading of the word “immersion,” I believe there is still value in using the word to describe the brand of games that evoke a particular kind of player engagement. I propose that instead of abandoning the concept of immersion altogether, we establish a clearer understanding of what makes a game immersive.

Justin Keverne (of the influential blog Groping the Elephant) recently said to me: “I once asked ten people to define immersion, all ten said it was easy, all ten gave different answers.”

This is my answer.

Immersion is not about realism

“Realistic” is an unfortunate appraisal in games criticism. Realism is a technological horizon: the infinitely distant goal of a simulation so advanced that it is indistinguishable from our reality. This is problematic for at least two reasons.

As a simulation approaches the infinite complexity of reality, its flaws stand out in increasingly stark contrast. This effect is customarily called the uncanny valley when referring to robotic or animated simulations of human beings; but I find Potemkin villages to be a more apt metaphor for its effect on video games, as a modern action-oriented video game needs to simulate much more than one human character. Games have advanced over the past two decades primarily along the axes of visual fidelity and scope, with very few games exploring the more interesting third axis of interactive fidelity. This arms race toward faux realism has produced a trend of highly detailed but static environments: doors and cabinets which cannot be opened, lights which cannot be switched off, windows that do not break, and props which are apparently fixed in place with super glue.

The second problem with the pursuit of realism is that reality is not particularly well-suited to the needs of a video game. Reality can often be dull, noisy, or confusing; it is indifferent to an individual and full of irrelevant information. A well-made level is architected, dressed, and lit such that it guides the player through its space. Reality is rarely so prescriptive; its halls and roads are designed to lead many people to many destinations. In fact, those non-interactive doors and lights which reveal the pretense of a game world are non-interactive specifically because they don’t matter to the path the designer has laid out. This tension between the expectations of a realistic world and the prescriptive focus of a video game becomes more apparent as games move toward greater realism.

For these reasons, the pursuit of realism is actually detrimental to immersion.

Immersion is about consistency

In his postmortem for Deus Ex, Warren Spector described how the team at Ion Storm Austin cut certain game objects because their real-world functionality could not be captured in the game. It may not be realistic or even especially plausible that Deus Ex’s future world would lack modern appliances, but the decision to cut these objects preempted the more obvious questions about why they could not be used.

Consistency in a game simulation simply means that objects behave according to a set of coherent rules. These rules are often guided by realism, because realism provides the audience with a common understanding of physical properties, but they are not beholden to it. Thief’s exemplary stealth gameplay is based on a rich model of light and sound, quantized into a small number of intuitive reactions. In BioShock, objects exhibit exaggerated responses to real world stimuli like fire, ice, and electricity; but also to fictional forces such as telekinesis.

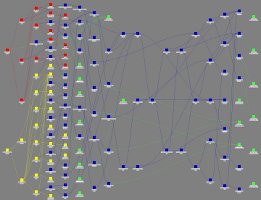

Adherence to a set of rules provides the opportunity for the player to learn and to extrapolate from a single event to a pattern of similar behaviors linked by these rules. In their GDC 2004 presentation Practical Techniques for Implementing Emergent Gameplay, Harvey Smith and Randy Smith showed how simulations with strongly connected mechanics may allow the player to improvise and accomplish a goal through a series of indirect actions. When a player is engaged in this manner, observing and planning and reacting, she is immersed in the game.
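To make the idea of strongly connected rules concrete, here is a minimal sketch in Python. It is my own illustration, not the implementation used by any of the games mentioned above: the rule table, the property names, and the `apply_stimulus` helper are all hypothetical. The point it tries to show is that reactions are looked up by property rather than by individual object, so anything the player learns about one wet or flammable thing should hold for every other.

```python
from dataclasses import dataclass, field

# Hypothetical rule table: (stimulus, property) -> resulting state.
# Rules are keyed by property, never by a specific object, so every
# object sharing a property reacts the same way.
RULES = {
    ("fire", "flammable"): "burning",
    ("ice", "wet"): "frozen",
    ("electricity", "wet"): "electrified",
    ("electricity", "metal"): "electrified",
    ("electricity", "living"): "stunned",
}


@dataclass
class GameObject:
    name: str
    properties: set = field(default_factory=set)
    states: set = field(default_factory=set)
    touching: list = field(default_factory=list)  # objects in contact


def apply_stimulus(obj, stimulus, visited=None):
    """Apply a stimulus to obj according to RULES.

    Assumption for this sketch: only electricity propagates, and only
    through objects that have become electrified.
    """
    visited = set() if visited is None else visited
    if id(obj) in visited:
        return  # avoid looping forever on mutual contact
    visited.add(id(obj))

    for prop in sorted(obj.properties):
        effect = RULES.get((stimulus, prop))
        if effect is not None:
            obj.states.add(effect)

    if stimulus == "electricity" and "electrified" in obj.states:
        for other in obj.touching:
            apply_stimulus(other, stimulus, visited)


# An indirect action: shock the puddle, not the guard standing in it.
puddle = GameObject("puddle", {"wet"})
guard = GameObject("guard", {"living"})
crate = GameObject("crate", {"flammable"})
puddle.touching.extend([guard, crate])

apply_stimulus(puddle, "electricity")
print(puddle.states, guard.states, crate.states)
```

In this sketch the guard ends up stunned even though the player never targeted him, while the crate, which has no rule for electricity, is left alone. Nothing in the code names that particular plan; it falls out of two general rules, which is the kind of improvisation the paragraph above describes.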

Immersion is broken by objects which behave unexpectedly or which have no utility at all. When a player attempts to use one of these objects, she discovers an unexpected and irrational boundary to the simulation: an “invisible wall” of functionality which shatters the illusion of a coherent world. Big budget games seem especially prone to this failure condition, as they contain large quantities of art which imply more functionality than the game’s simulation actually supports.

Immersion is a state of mind

As I suggested above, a player becomes immersed in a game when engaging with its systems with such complete focus that awareness of the real world falls away. Immersive sims, a more strictly defined genre, may exhibit certain common features such as a first-person perspective or minimal HUD. While these aspects may be conducive to player immersion, they are not strictly necessary; any game which produces this focused state of mind is immersive. (In fact, one of the most immersive games I have played recently is the third-person Dark Souls.)

Outside of video games, there is a term for this state of mind of complete focus and engagement: flow.

Immersive video games are those which promote the flow state via engaging, consistent mechanics, and sustain it by avoiding arbitrary or irrational boundary cases.

Further reading

Adrian Chmielarz recently published a piece criticizing the ways in which highly scripted games break immersion, and suggested a contract of sorts in which players would forgo game-breaking behavior if developers would smooth over the edge cases that reveal the boundaries of a game’s scripting.

Casey Goodrow wrote a response to Chmielarz’s article in which he further highlighted the tense relationship between immersion and realism and argued that a player who breaks a game by boundary testing its systems is actually immersed in that game.